Improved chipset performance and efficiency enable crucial vector operations for AI, ML and other data-intensive applications

REDWOOD CITY, Calif., Oct. 30, 2024 -- MinIO, the leader in high-performance storage for AI, today announced compelling new optimizations and benchmarks for the Arm®-based chipsets powering its object store. These optimizations underscore the relevance of low-power, computationally dense chips in key AI-related tasks and demonstrate the readiness and superiority of the Arm architecture for modern AI and data processing workloads, including erasure coding, bit rot protection and encryption.

MinIO's work leveraged the latest Scalable Vector Extension (SVE) enhancements. SVE improves the performance and efficiency of vector operations, which are crucial for high-performance computing, artificial intelligence, machine learning and other data-intensive applications.

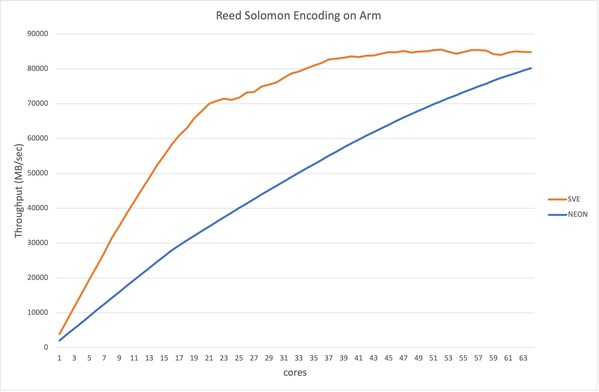

Specifically, MinIO enhanced its existing Reed Solomon erasure coding library. The result was 2x faster throughput than the previous NEON instruction set implementation, as shown in this graph:

Reed Solomon Encoding on Arm

Just as significantly, the new code needs only a quarter of the available cores (16) to consume half the memory bandwidth. Previously, it required 32 cores to reach the same memory bandwidth consumption.
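For readers unfamiliar with erasure coding, the idea can be illustrated with a deliberately simplified sketch. This is not Reed Solomon and not MinIO's code: it uses a single XOR parity shard, the simplest erasure code, which can repair exactly one lost shard. Reed Solomon generalizes the same idea to multiple parity shards using finite-field arithmetic. All function names here are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, column by column."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def encode(data_shards):
    """Return the data shards plus one XOR parity shard."""
    return list(data_shards) + [xor_blocks(data_shards)]


def reconstruct(shards):
    """Rebuild the single missing shard (marked None) from the survivors."""
    missing = [i for i, shard in enumerate(shards) if shard is None]
    if len(missing) != 1:
        raise ValueError("single parity can repair exactly one lost shard")
    survivors = [shard for shard in shards if shard is not None]
    repaired = list(shards)
    # XOR of all surviving shards (data and parity) equals the lost shard.
    repaired[missing[0]] = xor_blocks(survivors)
    return repaired


data = [b"ARM-", b"SVE-", b"NEON"]  # three equal-length data shards
stored = encode(data)                # four shards: three data plus one parity
damaged = list(stored)
damaged[1] = None                    # simulate a lost drive
recovered = reconstruct(damaged)
```

The inner loop is a byte-wise operation over large buffers, which is the kind of work that vector instruction sets such as NEON and SVE accelerate.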

Highway Hash

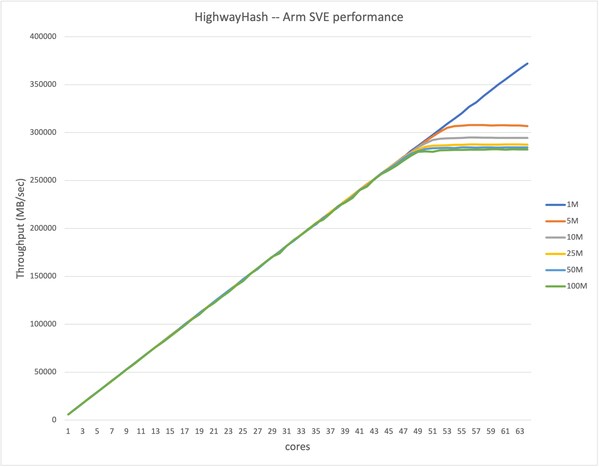

MinIO also achieved significant performance gains for the Highway Hash algorithm it uses for bit-rot detection. The algorithm runs frequently during both GET (read) and PUT (write) operations. SVE support for the core hashing update function delivered the following results:

Highway Hash -- Arm SVE performance

The performance scales linearly as the core count goes up, and for the larger block sizes, it starts to reach the memory bandwidth limit around 50 to 52 cores.
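The role of a hash in bit-rot detection can be sketched as follows. This is an illustrative assumption, not MinIO's implementation: Python's standard library has no Highway Hash, so keyed BLAKE2b from `hashlib` stands in for it, and the `put`/`get` helpers, the in-memory `store` and the all-zero key are hypothetical.

```python
import hashlib

KEY = bytes(32)  # hypothetical all-zero 256-bit key, for illustration only


def checksum(block: bytes) -> bytes:
    """Keyed 256-bit digest of a block (BLAKE2b as a stand-in hash)."""
    return hashlib.blake2b(block, key=KEY, digest_size=32).digest()


def put(store, name, block):
    """Write path: store the block together with its digest."""
    store[name] = (block, checksum(block))


def get(store, name):
    """Read path: recompute the digest and refuse to return rotted data."""
    block, expected = store[name]
    if checksum(block) != expected:
        raise IOError(f"bit rot detected in {name!r}")
    return block


store = {}
put(store, "object-1", b"hello erasure-coded world")
clean = get(store, "object-1")

# Flip a single bit in the stored block to simulate silent corruption.
block, digest = store["object-1"]
store["object-1"] = (bytes([block[0] ^ 0x01]) + block[1:], digest)
try:
    get(store, "object-1")
    rot_detected = False
except IOError:
    rot_detected = True
```

Because the hash is computed on every write and re-verified on every read, its throughput directly affects both paths.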

Further, SVE offers support for lane masking via predicated execution, allowing more efficient utilization of the vector units. Extensive support for scatter and gather instructions makes it possible to efficiently access memory in a flexible manner.
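Lane masking and gather loads can be modeled conceptually in plain Python. The sketch below is an emulation for illustration only: it does not use SVE instructions or intrinsics, the 8-lane width is an arbitrary assumption (real SVE vector lengths vary by CPU), and all function names are hypothetical.

```python
VECTOR_LANES = 8  # assumed vector width in elements, for illustration only


def active_lanes(start, length, lanes=VECTOR_LANES):
    """Predicate for one iteration: lane i is active while start + i < length."""
    return [start + i < length for i in range(lanes)]


def predicated_add(a, b, lanes=VECTOR_LANES):
    """Element-wise a + b, processed one vector-width chunk at a time."""
    out = [0] * len(a)
    for start in range(0, len(a), lanes):
        mask = active_lanes(start, len(a), lanes)
        for lane, active in enumerate(mask):
            if active:  # inactive lanes neither load nor store
                out[start + lane] = a[start + lane] + b[start + lane]
    return out


def gather(memory, indices, mask):
    """Load memory[indices[i]] for active lanes; inactive lanes yield 0."""
    return [memory[idx] if active else 0 for idx, active in zip(indices, mask)]


a = list(range(11))               # 11 elements: one full chunk plus a tail of 3
b = [10] * 11
total = predicated_add(a, b)
tail_mask = active_lanes(8, 11)   # only the first 3 lanes are active
picked = gather([5, 6, 7, 8], [3, 0, 2, 1], [True, True, False, True])
```

The point of the predicate is that the final, partial chunk is handled by the same loop body as the full chunks, with no separate scalar tail loop.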

"Delivering performance gains while improving power efficiency is critical to building a sustainable infrastructure for the modern,intensive data processing workloads demanded by AI," said Eddie Ramirez,vice president of marketing and ecosystem development,Infrastructure Line of Business,Arm. "Performance efficiency is a key factor in why Arm has become pervasive across the datacenter and in the cloud,powering server SoCs and DPUs,and MinIO's latest optimizations will further drive Arm-based AI innovation for the world's leading data enterprises."

The latest generation of the NVIDIA® BlueField®-3 data processing unit (DPU) offers an integrated 16-core Arm-based CPU as part of the network controller card itself. This leads to simpler server designs by connecting the Non-Volatile Memory Express (NVMe) drives directly to the networking card and bypassing the main server CPUs altogether. This represents an ideal pairing for software-defined technologies like MinIO. With 400Gb/s Ethernet, BlueField-3 DPUs offload, accelerate and isolate software-defined networking, storage, security and management functions. Given the criticality of disaggregating storage and compute in modern AI architectures, the pairing is remarkably powerful.

MinIO has been at the forefront of storage innovation, and its latest benchmarks reveal the impressive performance of the Arm architecture in data-intensive tasks. They follow the foundational work undertaken to optimize and benchmark Arm-based AWS Graviton2 processors against Intel Skylake chips. The latest Arm architecture, particularly with NVIDIA's BlueField-3 DPU, demonstrates remarkable efficiency and scalability for storage workloads. This includes advanced functions such as erasure coding, encryption, and hashing, essential for AI data processing.

"As the technology world re-architects itself around GPUs and DPUs,the importance of computationally dense,yet energy efficient compute cannot be overstated," said Manjusha Gangadharan,Head of Sales and Partnerships at MinIO. "Our benchmarks and optimizations show that Arm is not just ready but excels in high-performance data workloads. We are delighted to continue to build our partnership with Arm to create true leadership in the AI field."

To learn more about the benchmark results, please see MinIO's blog detailing the optimizations and testing.

About MinIO

MinIO is pioneering high-performance object storage for AI/ML and modern data lake workloads. The software-defined, Amazon S3-compatible object storage system is used by more than half of the Fortune 500. With 1.7B+ Docker downloads, MinIO is the fastest-growing cloud object storage company and is consistently ranked by industry analysts as a leader in object storage. Founded in November 2014, the company is backed by Intel Capital, Softbank Vision Fund 2, Dell Technologies Capital, Nexus Venture Partners, General Catalyst and key angel investors.

CONTACT:

Tucker Hallowell

Inkhouse

minio@inkhouse.com